Our programs

RAISE: Responsible AI Adoption for Social Impact

Canada’s first national program for nonprofit AI adoption, backed by a $1.3M federal co-investment through the DIGITAL supercluster and delivered in partnership with Creative Destruction Lab and The Dais at Toronto Metropolitan University.

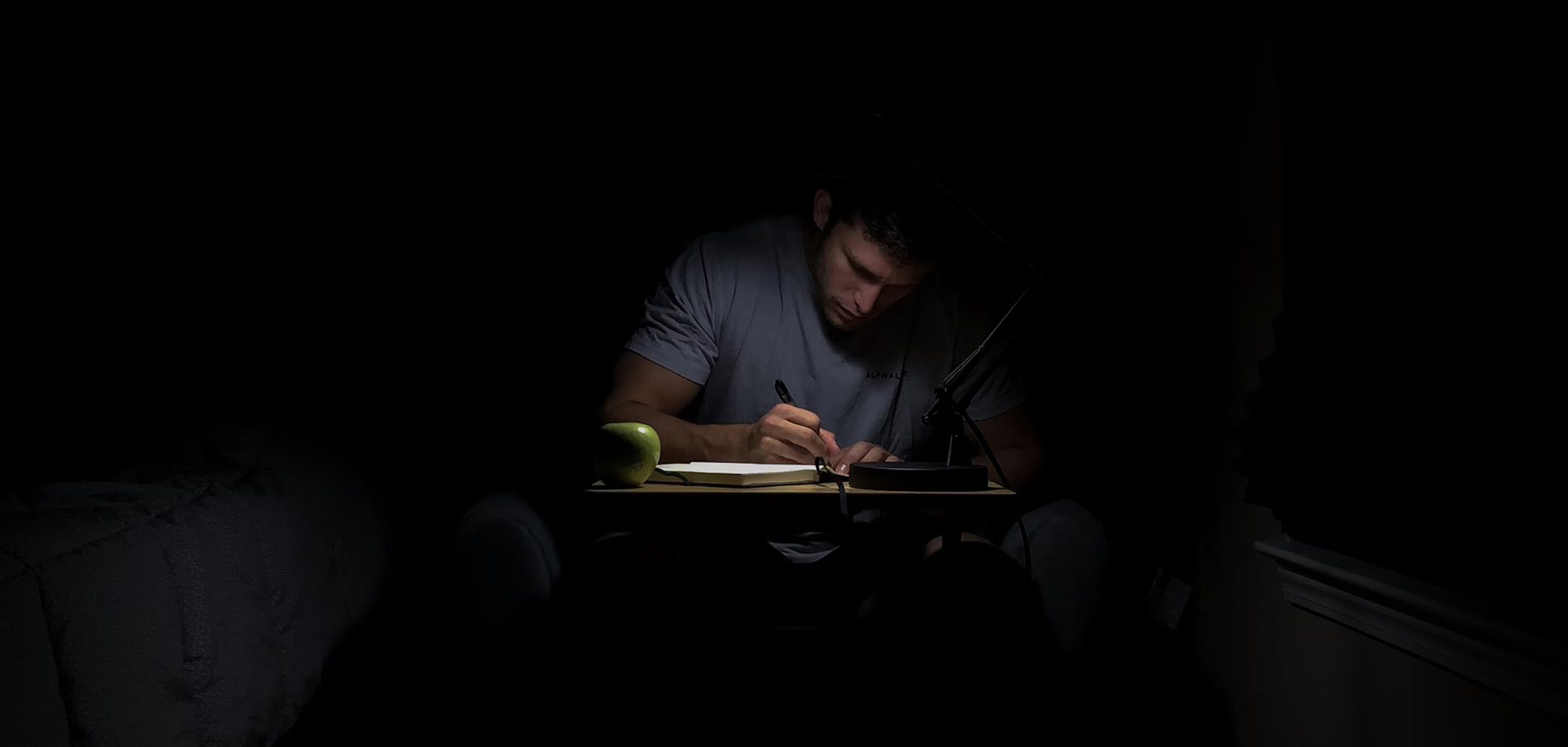

AI in Production Mentorship

Closing the apprenticeship gap in AI education: 200+ students build open-source AI projects alongside experienced practitioners as mentors, developing AI in Production skills with human-centered implementation defaults. Run in partnership with the University of Toronto and Northeastern University.

Demos are coming in March 2026.

AI Tinkerers Community

Running Canada’s largest AI/ML builders community with 4,300+ members. Monthly demo nights featuring 300+ production AI projects, plus hackathons and technical workshops. Part of a global network spanning 200+ cities, with support from Shopify, Google, Intel AI, Mozilla, Auth0, Accenture, Cohere, and more.